Introduction

PyMC3 is a Python-based probabilistic programming language used to fit Bayesian models with a variety of cutting-edge algorithms, including NUTS MCMC[1] and ADVI[2]. It is not uncommon for PyMC3 users to receive the following warning:

WARNING (theano.tensor.blas): Using NumPy C-API based implementation for BLAS functions.

where Theano[3] is the autodiff engine that PyMC3[4] uses under the hood. The usual solution is to reinstall the library and its dependencies following the operating-system-specific instructions in the PyMC3 wiki.

I am working on a project that uses PyMC3 to fit large hierarchical models. I was receiving the warning on the Linux server used to fit the full models, but not on my local MacBook Pro when experimenting with model designs, even after re-creating the conda environment. So I asked for help on the PyMC Discourse with two questions:

- How can I get this warning to go away?

- Is the problem that Theano is warning about affecting its performance?

Thankfully, I got the first question squared away (it was a problem with my $PATH) and that enabled me to test the second.

The results of that test are presented here.

Update

Based on a suggestion on Twitter from PyMC core developer Thomas Wiecki, I have since updated this analysis by fitting the same model on the same data on the same compute cluster with PyMC v4 (specifically v4.0.0b2). I left the text below largely untouched, but the main takeaway is that Wiecki was (unsurprisingly) correct: version 4 is much faster than version 3.

TL;DR

- Does Theano’s warning “Using NumPy C-API based implementation for BLAS functions” affect its performance?

- On average, sampling was faster without the warning.

- But the sampling rate at its fastest point was the same in each condition.

- Thus, fixing the underlying cause of the warning may speed up sampling, but there is still a lot of variability due to the stochastic nature of MCMC.

- MCMC sampling with PyMC v4 (currently in beta) is faster than with PyMC3.

The experiment

For my test, I fit a large hierarchical model that I am working on both with and without the amended $PATH variable; fitting without the amended $PATH produced the Theano warning.

The model is for a research project that is still hush-hush, so I can’t publish the code for it just yet, but it is a negative binomial hierarchical model with thousands of parameters.

The model was fit with just under 1 million data points.

I fit four chains for each round of MCMC, each with a different random seed, 1,000 tuning draws, and 1,000 sampling draws.

Each chain was run on a separate CPU with up to 64 GB of RAM.

Using a custom callback, I logged a time point every 5 draws, during both tuning and sampling.
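The callback itself isn’t shown in this post, so here is a minimal sketch of one. It assumes the `callback` argument of `pm.sample` in recent PyMC3 3.x releases, which (as I understand it) calls `callback(trace=..., draw=...)` for every draw; the attributes `draw.chain`, `draw.draw_idx` and `draw.tuning` are what I believe PyMC3’s `Draw` namedtuple carries, so check them against your installed version. `DrawTimer`, `FakeDraw`, and the seeds in the commented usage are hypothetical.

```python
import time
from collections import defaultdict, namedtuple


class DrawTimer:
    """Hypothetical callback: log a wall-clock time point every `every` draws, per chain."""

    def __init__(self, every=5, clock=time.time):
        self.every = every
        self.clock = clock  # injectable so the timer can be tested without real waiting
        self.log = defaultdict(list)  # chain id -> [(draw_idx, tuning, seconds), ...]

    def __call__(self, trace=None, draw=None):
        # Assumes `draw` exposes .chain, .draw_idx (counting tuning draws too) and .tuning.
        if draw.draw_idx % self.every == 0:
            self.log[draw.chain].append((draw.draw_idx, draw.tuning, self.clock()))


# Stand-in with the same attribute names, so the timer can be tried without PyMC3.
FakeDraw = namedtuple("FakeDraw", ["chain", "draw_idx", "tuning"])

# With PyMC3 installed, the design described above might look roughly like:
#   timer = DrawTimer(every=5)
#   with model:
#       pm.sample(draws=1000, tune=1000, chains=4,
#                 random_seed=[1, 2, 3, 4],  # hypothetical seeds
#                 callback=timer)
#   timer.log  # time points per chain
```

Injecting the clock keeps the timer cheap (one function call every fifth draw) and makes it easy to test with fake timestamps.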

Results

Resource consumption

To begin, each model used about 53-56 GB of the available 64 GB of RAM, so there was no substantial difference between conditions.

Sampling rates

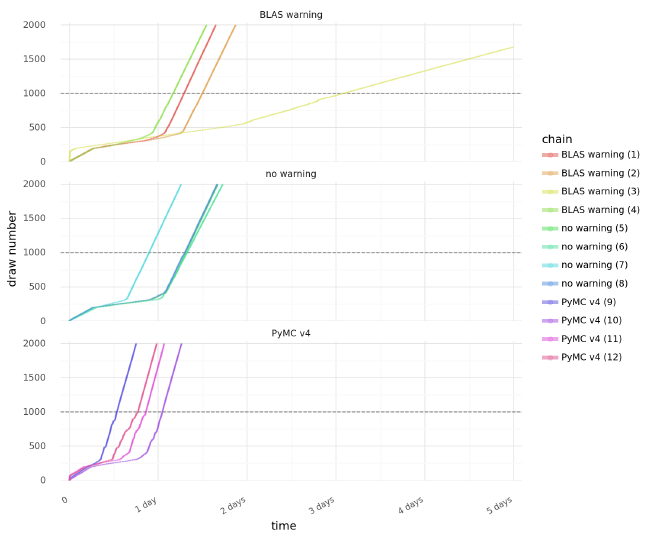

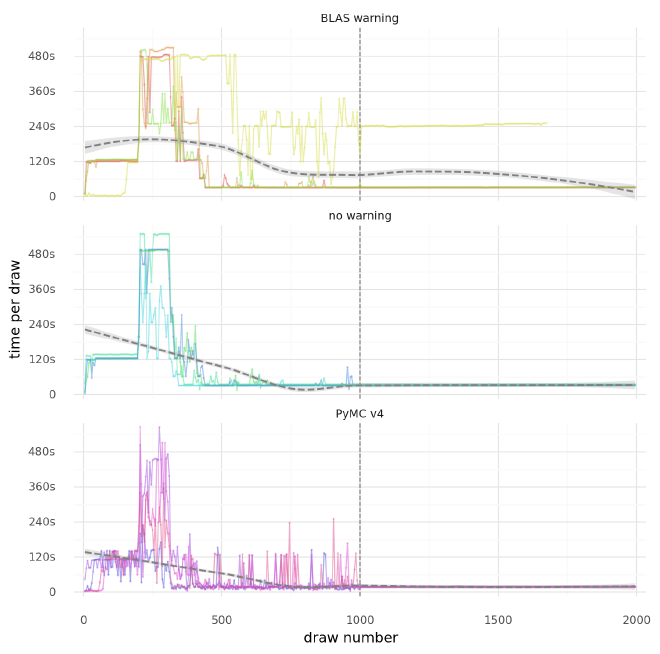

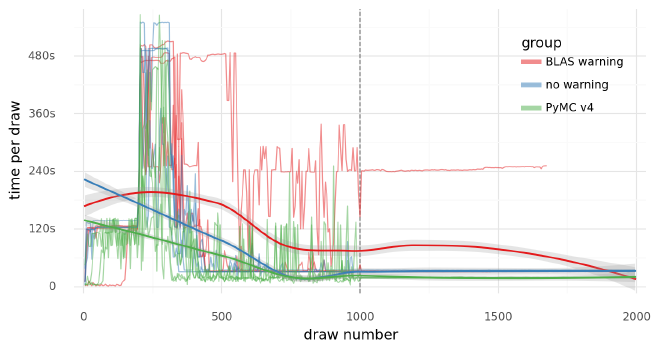

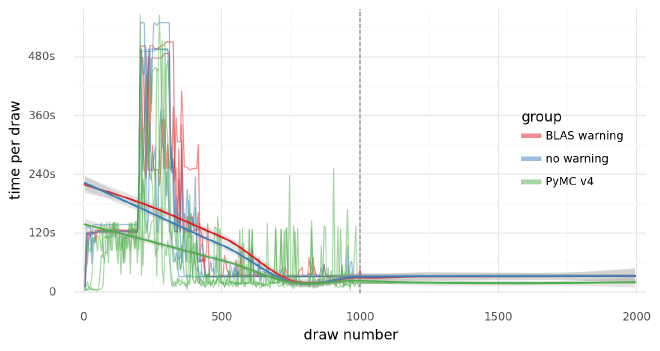

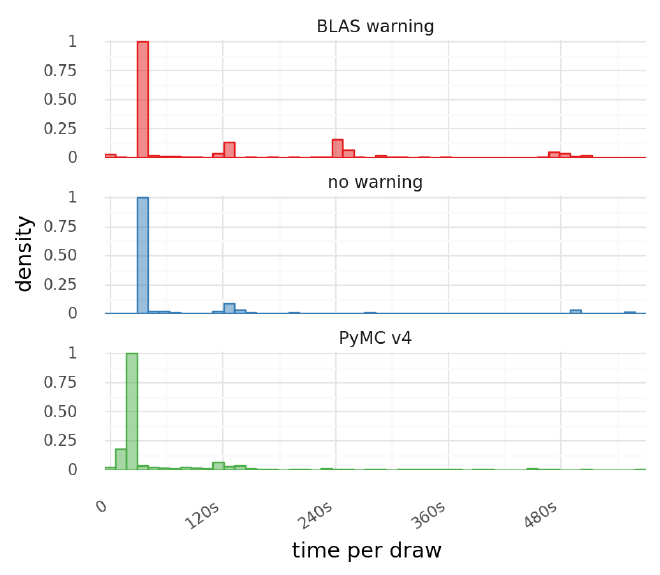

The first plot shows the time course of each chain, colored by experimental condition. The next displays the duration of each 5-draw interval, indicating the sampling rate over time for each condition, and is followed by a plot that shows the same data on a single set of axes.

Each chain generally went through three stages: rapid early tuning, slow tuning, and then rapid post-tuning sampling (the draws that represent the approximate posterior distributions).
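To get from logged time points to the per-interval durations in these plots, consecutive timestamps just need to be differenced and labelled by stage. A minimal sketch, assuming each log entry holds the draw index, a tuning flag, and a wall-clock timestamp in seconds (`TimePoint` and `interval_durations` are hypothetical names, not the code used for this post):

```python
from collections import namedtuple

# One logged time point (hypothetical format): draw index, still tuning?, wall-clock seconds.
TimePoint = namedtuple("TimePoint", ["draw_idx", "tuning", "timestamp"])


def interval_durations(points):
    """Return (stage, minutes) for each consecutive pair of time points in one chain.

    Each interval is labelled by the stage of its *starting* point, so the
    interval that straddles the end of tuning is counted as 'tune'.
    """
    points = sorted(points, key=lambda p: p.draw_idx)
    intervals = []
    for start, end in zip(points, points[1:]):
        stage = "tune" if start.tuning else "sampling"
        intervals.append((stage, (end.timestamp - start.timestamp) / 60.0))
    return intervals
```

Labelling by the starting point is a judgment call; with 5-draw intervals it only affects the single interval that spans the switch from tuning to sampling.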

One exception is a chain from the “warning” condition that, compared with the others, had an incredibly rapid early stage, a prolonged slow stage, and a slower final stage. (This chain would have needed more than 5 days to finish, and my job timed out before it could.) A similar result happens reliably when I use ADVI to initialize the chains (this may be a future post), so I think it is a product of the randomness inherent to MCMC and not necessarily attributable to the Theano warning. Removing this chain shows how similar the results were among the remaining chains.

The sampling durations in each condition are also plotted as histograms.

Summary table

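Finally, the following table summarizes the sampling rates for each chain. “Total” is the cumulative wall time in hours at the end of each stage; the remaining columns describe the time, in minutes, taken by each 5-draw interval. Chain #2 of the “warning” condition never finished (>5 days).

The average draw rate during the “sampling” stage is nearly identical across all PyMC3 chains (2.6-2.9 minutes per 5 draws), except for the outlier chain.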

| chain group | chain | stage | total (hr.) | mean (min.) | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|---|---|
| BLAS warning | 0 | tune | 30.9 | 9.3 | 11.2 | 0.9 | 2.5 | 2.6 | 10.1 | 40.5 |
| BLAS warning | 0 | sampling | 39.5 | 2.6 | 0.0 | 2.5 | 2.5 | 2.6 | 2.6 | 2.6 |
| BLAS warning | 1 | tune | 35.9 | 10.8 | 12.5 | 0.8 | 2.7 | 2.7 | 10.5 | 42.6 |
| BLAS warning | 1 | sampling | 44.9 | 2.7 | 0.0 | 2.6 | 2.7 | 2.7 | 2.7 | 2.8 |
| BLAS warning | 2 | tune | 73.7 | 22.2 | 14.3 | 0.1 | 10.0 | 20.2 | 39.2 | 40.5 |
| BLAS warning | 2 | sampling | 119.8 | 20.3 | 0.8 | 12.1 | 20.2 | 20.2 | 20.6 | 21.0 |
| BLAS warning | 3 | tune | 28.0 | 8.4 | 8.7 | 0.8 | 2.7 | 3.2 | 10.6 | 42.0 |
| BLAS warning | 3 | sampling | 37.0 | 2.7 | 0.1 | 2.7 | 2.7 | 2.7 | 2.7 | 3.8 |
| no warning | 4 | tune | 31.4 | 9.4 | 11.9 | 0.8 | 2.6 | 2.6 | 10.2 | 41.3 |
| no warning | 4 | sampling | 40.0 | 2.6 | 0.0 | 2.6 | 2.6 | 2.6 | 2.6 | 2.6 |
| no warning | 5 | tune | 31.7 | 9.6 | 12.6 | 1.4 | 2.9 | 2.9 | 11.4 | 45.9 |
| no warning | 5 | sampling | 41.4 | 2.9 | 0.0 | 2.8 | 2.9 | 2.9 | 2.9 | 2.9 |
| no warning | 6 | tune | 21.5 | 6.5 | 7.0 | 0.8 | 2.6 | 2.6 | 10.2 | 41.2 |
| no warning | 6 | sampling | 30.2 | 2.6 | 0.0 | 2.6 | 2.6 | 2.6 | 2.6 | 2.6 |
| no warning | 7 | tune | 31.1 | 9.4 | 11.6 | 0.2 | 2.6 | 2.6 | 10.3 | 41.4 |
| no warning | 7 | sampling | 39.8 | 2.6 | 0.0 | 2.6 | 2.6 | 2.6 | 2.6 | 2.6 |
| PyMC v4 | 8 | tune | 12.8 | 3.8 | 4.1 | 0.4 | 1.3 | 1.5 | 6.1 | 19.2 |
| PyMC v4 | 8 | sampling | 18.0 | 1.6 | 0.1 | 1.5 | 1.6 | 1.6 | 1.6 | 2.2 |
| PyMC v4 | 9 | tune | 25.1 | 7.6 | 10.2 | 0.3 | 1.5 | 2.6 | 9.2 | 47.1 |
| PyMC v4 | 9 | sampling | 30.3 | 1.6 | 0.0 | 1.5 | 1.5 | 1.6 | 1.6 | 1.6 |
| PyMC v4 | 10 | tune | 20.6 | 6.2 | 8.7 | 0.2 | 1.5 | 1.8 | 9.5 | 47.2 |
| PyMC v4 | 10 | sampling | 25.6 | 1.5 | 0.0 | 1.2 | 1.5 | 1.5 | 1.5 | 1.5 |
| PyMC v4 | 11 | tune | 18.5 | 5.6 | 6.6 | 0.2 | 1.5 | 1.6 | 9.4 | 31.1 |
| PyMC v4 | 11 | sampling | 23.6 | 1.5 | 0.0 | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 |
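The table’s columns look like a pandas-style `describe()` of the 5-draw durations within each stage, with the hour column accumulating across stages. For chain 0, 200 tuning intervals (1,000 draws / 5) × 9.3 min ≈ 31 hr, and 200 sampling intervals × 2.6 min ≈ 8.7 hr more brings it to about 39.5 hr. A stdlib-only sketch of that summary under this assumption (`summarize_stages` is a hypothetical name; the input is one chain’s list of `(stage, minutes)` pairs):

```python
import statistics


def summarize_stages(intervals, stages=("tune", "sampling")):
    """Summarize one chain's (stage, minutes) intervals in the layout of the table above.

    Returns {stage: stats}. 'total' is the cumulative wall time in hours at the
    end of the stage; the other fields describe minutes per 5-draw interval.
    Quartiles use linear interpolation (method="inclusive"), which should match
    pandas' default for describe().
    """
    summary = {}
    elapsed_hr = 0.0
    for stage in stages:
        mins = [m for s, m in intervals if s == stage]
        elapsed_hr += sum(mins) / 60.0  # running total across stages
        if len(mins) < 2:
            continue  # stdev and quartiles need at least two intervals
        q25, q50, q75 = statistics.quantiles(mins, n=4, method="inclusive")
        summary[stage] = {
            "total": round(elapsed_hr, 1),
            "mean": round(statistics.mean(mins), 1),
            "std": round(statistics.stdev(mins), 1),  # sample std (ddof=1), as in pandas
            "min": round(min(mins), 1),
            "25%": round(q25, 1),
            "50%": round(q50, 1),
            "75%": round(q75, 1),
            "max": round(max(mins), 1),
        }
    return summary
```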

Conclusion

My understanding of the inner workings of PyMC and Theano is limited, so I can’t offer a confident final conclusion. From this experiment, it seems like PyMC3 performed equivalently with and without the warning message. That said, it is probably best to address the underlying issue to ensure optimal PyMC3 performance and behavior.

Update with the addition of PyMC v4: PyMC v4 samples faster during both the tuning and sampling stages of MCMC. In addition, version 4 tends to have fewer long-duration steps during tuning, dramatically cutting down on overall runtime.
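To put rough numbers on “faster”, the per-chain values in the summary table can be averaged by group. This is a back-of-the-envelope sketch using only the table’s numbers (chain 2 is left out because it never finished); the names `total_hr`, `sampling_rate`, and `speedup` are illustrative, and with four chains per group it is descriptive rather than a statistical test.

```python
from statistics import mean

# Cumulative wall time (hr.) at the end of sampling, copied from the summary table.
total_hr = {
    "BLAS warning": [39.5, 44.9, 37.0],      # chains 0, 1, 3 (chain 2 never finished)
    "no warning": [40.0, 41.4, 30.2, 39.8],  # chains 4-7
    "PyMC v4": [18.0, 30.3, 25.6, 23.6],     # chains 8-11
}
# Mean minutes per 5 draws during the sampling stage, same chains.
sampling_rate = {
    "BLAS warning": [2.6, 2.7, 2.7],
    "no warning": [2.6, 2.9, 2.6, 2.6],
    "PyMC v4": [1.6, 1.6, 1.5, 1.5],
}


def speedup(baseline, other, table):
    """How many times faster `other` is than `baseline`, comparing group means."""
    return mean(table[baseline]) / mean(table[other])


for group in total_hr:
    print(f"{group:>12}: {mean(total_hr[group]):5.1f} hr total, "
          f"{mean(sampling_rate[group]):.2f} min per 5 draws")
print(f"v4 vs. no-warning PyMC3, total time:    {speedup('no warning', 'PyMC v4', total_hr):.2f}x")
print(f"v4 vs. no-warning PyMC3, sampling rate: {speedup('no warning', 'PyMC v4', sampling_rate):.2f}x")
```

With these numbers, the v4 chains finish in roughly two-thirds of the time of the no-warning PyMC3 chains (about 1.55x faster overall) and produce post-tuning draws about 1.7x faster.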

Featured image source: “Theano – A Woman Who Ruled the Pythagoras School”, Ancient Origins.

[1] Hoffman, Matthew D., and Andrew Gelman. 2011. “The No-U-Turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo.” arXiv [stat.CO]. http://arxiv.org/abs/1111.4246.

[2] Kucukelbir, Alp, Dustin Tran, Rajesh Ranganath, Andrew Gelman, and David M. Blei. 2017. “Automatic Differentiation Variational Inference.” Journal of Machine Learning Research: JMLR 18 (14): 1–45.

[3] Theano was the wife (and follower) of the famous mathematician Pythagoras, and Aesara was their daughter, who followed in their occupational and religious footsteps.

[4] The next version of PyMC3 will be called “PyMC” and will use a fork of Theano called Aesara.